Video quality has been improving in recent years, and High Dynamic Range (HDR) is one of the most exciting advancements. Unlike Ultra-HD, which sharpens the picture by increasing the number of pixels used for an image, HDR makes images more vivid and lifelike by specifying brightness and color more precisely, using hardware that most recent TVs already have.

HDR achieves this by using metadata in the video file to specify shades of brightness and color more precisely than ever before. As a result, TV screens display better, more realistic images. Most TVs produced in recent years have the hardware to produce HDR images, making the technology widely accessible.

The world of high dynamic range is new and exciting. Although the technology is only just beginning to saturate the market, Amazon Prime Video, Netflix, and a number of blockbuster films are already embracing it. It is expected that HDR will soon become the standard for most content produced, and nearly any device with an HD screen will be designed to view it.


What Is HDR and How Does It Work?

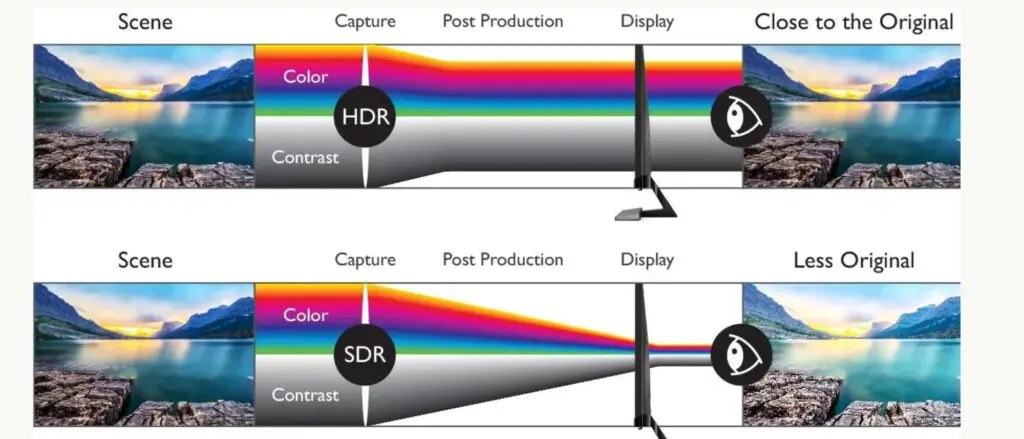

To understand HDR, let’s start with how a film or television show is produced. The director or producer has a vision for how the content should look, and the video is shot and edited in post-production. During this process, the precise colors and brightness levels are set.

Before HDR, the video signal would be sent to the television, and the screen would display the content based on its hardware and color abilities, often resulting in a different look than intended. Some brands, such as Samsung, offered advanced post-processing changes that would further shift the colors and brightness.

With HDR, however, something new is happening. Instead of the television’s limitations or post-processing driving how the image looks, the content producer can now specify exactly how bright a pixel should be and what color it should be. This information is embedded in the video source as metadata.

To make use of this metadata, a receiver or media device is required to pass the information to the screen, and the television must be capable of interpreting the data and producing the specified levels of brightness and colors. With these enhancements, HDR offers a new level of realism and vividness to the viewing experience.

Important Note about HDR

It’s important to understand that HDR is not the same as simply increasing the brightness and contrast on your display device. While increasing contrast does change the brightness and darkness of some colors, it does not expand the range of colors your display device can show. HDR, by comparison, uses metadata to specify shades of brightness and color beyond what you can modify in the screen settings.

When you adjust contrast on your display device, the device itself decides how to apply it. HDR, on the other hand, is applied by the film’s director and editorial team. Different directors may choose different ranges of brightness for different scenes, or apply HDR only to certain scenes.

HDR is a tool that content producers use to enhance your visual experience in a specific way, and there are no settings to adjust beyond turning on your display device’s HDR capability. This simplicity is a huge added benefit, as once HDR is on, everything is pre-adjusted and there’s nothing else for you to worry about.

HDR is a straightforward standard to implement, and there are many modestly priced panels available that support it, such as the Samsung 43-Inch Class Crystal 4K UHD AU8000 Series HDR and the TCL 65″ Class 5-Series 4K UHD QLED Dolby Vision & Atmos, both of which are available on Amazon.

The Different Versions of HDR

When looking for a new HDR device, there are various options to consider. The different versions of HDR available each have their own unique benefits, but unfortunately, they are not all compatible with each other.

The most commonly used HDR formats include Dolby Vision, HDR10, and Hybrid Log-Gamma (HLG). It’s crucial to ensure that your display device and media source are both compatible with the same version of HDR. Otherwise, the device won’t be able to interpret the HDR information, and you’ll be left with a standard dynamic range display.
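The matching rule above can be sketched in a few lines of Python. This is only an illustration: the function name `pick_format` and the preference order are assumptions made for this example, and real devices negotiate their capabilities over the connection rather than through code like this.

```python
# Hypothetical preference order for this sketch; it is not part of any standard.
PREFERENCE = ["Dolby Vision", "HDR10+", "HDR10", "HLG"]


def pick_format(content_formats, display_formats):
    """Return the first preferred format both sides support, else "SDR"."""
    shared = set(content_formats) & set(display_formats)
    for fmt in PREFERENCE:
        if fmt in shared:
            return fmt
    # No HDR format in common: the picture falls back to standard dynamic range.
    return "SDR"


print(pick_format({"Dolby Vision", "HDR10"}, {"HDR10", "HLG"}))  # HDR10
print(pick_format({"Dolby Vision"}, {"HDR10", "HLG"}))           # SDR
```

In the second call the content and the display have no format in common, so the result is a standard dynamic range picture.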

However, it’s worth noting that as time passes, more display devices are expected to become compatible with multiple versions of HDR. Additionally, it’s anticipated that future media content will feature multiple versions of HDR, making it easier to find compatible devices.

While it may seem daunting to choose from different HDR versions, each format has its own advantages and disadvantages. By doing some research and comparing the features, you can find the version that best suits your needs.

Dolby Vision

Currently, Dolby Vision reigns supreme when it comes to image quality. The reason for this is that Dolby Vision utilizes ‘dynamic’ metadata, unlike most other standards. While static metadata gives your display device a single set of instructions for the entire movie or show, Dolby Vision allows metadata to be adjusted on a shot-by-shot or even a frame-by-frame basis.

However, it’s important to note that Dolby Vision is a proprietary format. This means that content producers and device manufacturers must pay a licensing fee to utilize Dolby’s technology. Consequently, fewer media and display devices are compatible with Dolby Vision due to the associated cost.

Despite the cost barrier, Dolby Vision’s superior image quality has made it a popular choice for certain consumers who prioritize image quality above all else. Nevertheless, it remains to be seen whether Dolby Vision’s dominance will continue in the long run, as other standards may eventually catch up and offer comparable image quality at a lower cost.

HDR10 and HDR10 Plus

If you’re looking to enjoy as much HDR content as possible, HDR10 is currently the preferred format. Unlike Dolby Vision, HDR10 is an open format, so no licensing fees are required for its use. This results in a wider availability of content and display devices with HDR10 support, but it also means that there is less control over how it is implemented.

While Dolby Vision sets a standard for content producers and device manufacturers to ensure consistent image quality across different devices, HDR10 does not have the same oversight. As a result, HDR10-compatible devices may display HDR content differently.

It’s worth noting that HDR10 employs static metadata, which means that scenes with darker or brighter elements cannot be adjusted on the fly. This can result in muted colors or lack of detail in certain areas of the image. However, HDR10+ has addressed this issue by incorporating dynamic metadata, allowing for scene-by-scene adjustments. Nonetheless, HDR10+ has yet to achieve widespread adoption in the market.
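A toy calculation can show why static metadata may dim dark scenes. The sketch below assumes a deliberately simplified linear tone map (real displays use more sophisticated, nonlinear curves), and every number and name in it is hypothetical.

```python
def tone_map(pixel_nits, content_peak_nits, display_peak_nits):
    """Toy linear tone map: compress only when the content exceeds the display."""
    return pixel_nits * display_peak_nits / max(content_peak_nits, display_peak_nits)


DISPLAY_PEAK = 600.0  # hypothetical TV peak brightness, in nits
TITLE_PEAK = 4000.0   # hypothetical brightest highlight in the whole film (static metadata)
SCENE_PEAK = 200.0    # hypothetical brightest highlight in one dark scene (dynamic metadata)
pixel = 100.0         # a mid-bright pixel in that dark scene

# Static metadata: the display only knows the title-wide peak, so it
# compresses every scene as if the 4000-nit highlight could appear.
static_result = tone_map(pixel, TITLE_PEAK, DISPLAY_PEAK)

# Dynamic metadata: the display knows this scene tops out at 200 nits,
# which it can show without any compression.
dynamic_result = tone_map(pixel, SCENE_PEAK, DISPLAY_PEAK)

print(static_result)   # 15.0
print(dynamic_result)  # 100.0
```

Under these assumptions the same pixel is shown at 15 nits with static metadata and at its intended 100 nits with dynamic metadata, which is the kind of lost detail that scene-by-scene adjustment is meant to avoid.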

Hybrid Log-Gamma

Hybrid Log-Gamma (HLG) is a newer HDR format that offers a unique advantage over Dolby Vision and HDR10: it is backward-compatible. This means that if you have HLG content and only an SDR display device, the content will still display, with image quality as good as if you had bought the content in standard dynamic range.

HLG was co-developed by the BBC and Japan’s NHK, and it is currently primarily intended for broadcast TV to avoid having to make multiple channels for the same content, as some do with high and standard definition broadcasts.

While the backward compatibility of HLG is an obvious advantage, it does come with a drawback. HLG lacks the same range of darkness and brightness as Dolby Vision and HDR10, which can result in less vibrant colors and less detail in certain areas of the image. Nonetheless, HLG may still be a viable option, especially if you have multiple display devices but do not intend on upgrading all of them.
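The name “Hybrid Log-Gamma” describes the shape of the format’s signal curve, which is where the backward compatibility comes from. The sketch below implements that curve as defined in ITU-R BT.2100; the function name `hlg_oetf` is just a label chosen for this example, and the code is meant as an illustration rather than a production implementation.

```python
import math

# Constants from the HLG definition in ITU-R BT.2100.
A = 0.17883277
B = 1 - 4 * A
C = 0.5 - A * math.log(4 * A)


def hlg_oetf(scene_light):
    """Map normalized scene light (0..1) to a normalized HLG signal (0..1)."""
    if scene_light <= 1 / 12:
        # Lower part of the range: a square-root (gamma-like) curve,
        # similar to what SDR displays already expect.
        return math.sqrt(3 * scene_light)
    # Upper part of the range: a logarithmic curve that packs the
    # extra highlights into the remaining signal range.
    return A * math.log(12 * scene_light - B) + C


for light in (0.0, 1 / 12, 0.5, 1.0):
    print(f"{light:.4f} -> {hlg_oetf(light):.4f}")
```

The darker half of the signal follows a conventional gamma-style curve, so an SDR display can show it reasonably well, while the logarithmic upper half carries the highlights that an HLG-capable display can make use of.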

HDR Standards: Choosing Wisely

When it comes to HDR standards, HDR10 is the most common one available currently. To ensure that you can watch the widest range of HDR content, it’s advisable to go with an HDR10-compatible display device.

However, Dolby Vision is considered superior due to Dolby’s tight control over its implementation. If you have multiple display devices at home but only plan to upgrade some of them, HLG can be a good option.

In a few years, it’s expected that most display devices and media will be compatible with multiple formats. So, it might be wise to hold off on adopting HDR until the technology becomes more readily available and affordable.

Despite this, many media and devices have already switched to HDR. Therefore, it may become a standard sooner than you think.

How To Use HDR?

To use HDR, you only need three things: HDR content, an HDR-compatible display device (such as a TV or projector), and compatibility with HDR for all devices in between.

It’s important to note that when you connect an external source to a TV, HDR requires an HDMI connection that supports it (HDMI 2.0a or later), and to get the most out of it, you will need a Premium HDMI cable (check our article on how to add more HDMI ports to a TV). You should also ensure that HDR is turned on for all of your devices. Once you’ve done that, you’re ready to go.
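The requirement that every link in the chain supports HDR can be expressed as a simple check. In this sketch the device names and their capabilities are made up for illustration, and `first_blocker` is a hypothetical helper rather than part of any real tool.

```python
def first_blocker(chain):
    """Return the name of the first link that lacks HDR support, or None."""
    for name, supports_hdr in chain:
        if not supports_hdr:
            return name
    return None


# Hypothetical setup, listed in signal order from source to screen.
chain = [
    ("streaming box", True),
    ("AV receiver", False),  # e.g. an older receiver without HDR passthrough
    ("TV", True),
]

print(first_blocker(chain))  # AV receiver
```

A single device without HDR support, such as the receiver in this example, is enough to leave you with a standard dynamic range picture, so it is worth checking each device in turn.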

Here are some simple instructions for enabling HDR on some of our favorite devices:

LG

To enable HDR on your LG TV, follow these steps:

- Click the “Home” button on your remote and scroll to “HDMI.”

- Select “Settings” and choose “Advanced” from the following menu.

- Select “Picture” and then “HDMI ULTRA DEEP COLOR.”

- Turn on this feature and restart your TV.

Sony TVs

To enable HDR on your Sony TV, follow these steps:

- Go to “Home” and then “Settings.”

- Select “External Inputs” and then “HDMI Signal Format.”

- Choose the HDMI input that your HDR source is connected to and select “Enhanced Mode.”

- Your TV should reboot automatically.

Windows 10

To enable HDR on Windows 10, follow these steps:

- Click the “Start” button in the bottom left-hand corner of the screen.

- Select “Settings,” then “System,” and “Display.”

- If you have multiple display devices, choose the HDR-compatible display under “Select and Rearrange Displays.”

- Select “Windows HD Color Settings.”

- Under “Display Capabilities,” ensure that it says “Yes” next to “Play HDR Games and Apps.”

- Below that, turn on “Play HDR Games and Apps” by selecting the switch.

Xbox One X

To enable HDR on your Xbox One X, follow these steps:

- Press the Xbox button to open the Xbox guide.

- Scroll down to the “Settings” tab and select “All Settings.”

- From here, select “Display & Sound,” and then “Video Output.”

- Next, choose “Video Modes.”

- Make sure that both the “Allow 4K” and “Allow HDR” checkboxes are checked.

PlayStation 4

To enable HDR on your PS4, follow these steps:

- Using a Premium HDMI cable, connect the console to the correct HDR-compatible HDMI port on your display device. Ensure that it is connected directly to the display device and not to a receiver or switch box.

- On the PS4, go to “Settings,” then “Sound and Screen,” and then “Video Output Settings.”

- In the Video Output Settings menu, set the “HDR” and “Deep Colour” output settings to “Automatic.”

- The PS4 also allows you to calibrate your HDR settings for a customized viewing experience. From the Video Output Settings menu, select “Adjust HDR” and follow the instructions given on-screen.

Final Thoughts

HDR technology is a game-changer in the entertainment industry. The true-to-life image it produces creates a vivid viewing experience that has never been seen before. With the deep blacks and bright whites, images come to life, making the sun glare, shadows, and textures more prominent.

Not all HDR-capable devices can display HDR media due to the different versions of HDR available. To get the most out of HDR technology, choosing the right device for the content you want to watch is crucial.

In the future, most display devices and gaming consoles are likely to support multiple HDR formats. It may be wise to wait until more affordable devices that can support various formats become widely available before investing in HDR-capable devices.

Setting up your device to display HDR content is usually a straightforward process that requires only a few tweaks to your device’s settings. However, it’s essential to consult the device’s manual before setting it up, as some devices, such as the PlayStation 4, may not be compatible with every HDR-capable display.

Although HDR technology is still in its early stages, it will likely continue to improve over time. There’s no reason to wait for improvements to enjoy the stunning color ranges that HDR technology offers. You can get in on the action now and experience your favorite movies, shows, and games in a whole new light.

Related Q&A

What is the difference between HDR and increasing brightness and contrast on a display device?

HDR is not the same as increasing brightness and contrast on a display device. Increasing contrast only changes the brightness and darkness of some colors, while HDR specifies shades of brightness and color beyond what you can modify in the screen settings. HDR is applied by the film’s director and editorial team, and there are no settings to adjust beyond turning on the device’s HDR capability.

What are the different versions of HDR?

The most common versions of HDR are Dolby Vision, HDR10, and Hybrid Log-Gamma (HLG). Each format has distinct advantages and disadvantages. Different formats of HDR are not compatible with each other.

Which version of HDR has the best image quality?

Dolby Vision has the best image quality because it has ‘dynamic’ metadata. This allows for metadata to be adjusted between shots or even frame-by-frame.

What is the difference between HDR and Ultra-HD?

Ultra-HD gives you a more precise picture and drives up the number of pixels used for an image. HDR produces better, more lifelike images by specifying shades of brightness and color.

Do most TVs sold today have the hardware to produce HDR images?

Yes, most TVs sold over the last few years and in production today have the hardware to produce HDR images.

Is HDR a tool that content producers use to enhance visual experience?

Yes, HDR is a tool content producers use to enhance visual experience in a specific way.

What is metadata?

Metadata is information about the image data that is embedded in the video source.

Do you need a specific type of device to use HDR?

Yes, you need a receiver or media device that can pass the metadata on to the screen, and a TV that is capable of interpreting that data.

Is HDR a simple standard to implement?

Yes, HDR is a simple standard to implement, and many devices are available at modest prices.

What is Hybrid Log-Gamma (HLG)?

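Hybrid Log-Gamma (HLG) is one of the most common versions of HDR. It was co-developed by the BBC and Japan’s NHK, is primarily intended for broadcast TV, and is backward-compatible with SDR display devices.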

What happens to the video signal path with HDR?

Before HDR, the signal would go to the television, and based on its hardware and color abilities, the image would often look different than intended. With HDR, the content producer specifies the brightness and color of each pixel, and this information is embedded in the video source.

What are some companies that use HDR?

Amazon Prime Video and Netflix use HDR, and a number of blockbuster films use it as well.

Will most display devices eventually be compatible with multiple forms of HDR?

Yes, it is expected that most display devices will eventually be compatible with multiple forms of HDR.

Does HDR change the color of the image?

HDR does not change the color of the image, but it specifies the brightness and color of each pixel more precisely.
